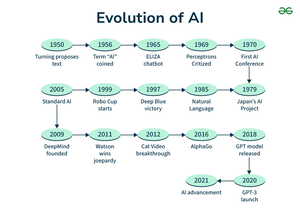

When Did AI Come Out? The Evolution of Artificial Intelligence from Theory to Reality

Artificial intelligence, once confined to science fiction and theoretical speculation, now powers critical functions across sectors ranging from healthcare and finance to transportation and the creative industries. AI's emergence was not a single event but a layered chronology spanning roughly eight decades, anchored by breakthrough theories, experimental machines, and transformative technological milestones. Determining when AI truly came into being, and when it began influencing real-world systems, requires examining the key junctures where abstract concepts first crystallized into working systems capable of learning, reasoning, and decision-making.

The roots of modern artificial intelligence trace back to the mid-20th century, when foundational theories emerged. In 1943, neurophysiologist Warren McCulloch and logician Walter Pitts published the first mathematical model of an artificial neuron, laying the groundwork for neural networks and, eventually, machine learning. Their work showed that networks of simple on/off units could compute logical functions, a pioneering step toward simulating cognition.

Seven years later, in 1950, Alan Turing addressed machine intelligence directly in his seminal paper, “Computing Machinery and Intelligence.” Turing posed a provocative question, “Can machines think?”, and proposed the “imitation game,” now known as the Turing Test: a benchmark for evaluating whether a machine's conversational behavior is indistinguishable from that of a human. This was not an invention but a conceptual framework that shaped the kind of intelligence AI would strive to achieve.

The year 1956 marks the official birth of AI as a formal academic discipline.

The Dartmouth Conference, a summer workshop organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, brought together researchers to explore the potential of machines to simulate human intelligence. The term “artificial intelligence,” coined by McCarthy in the 1955 proposal for the workshop, spread from there, signaling a new era of collaborative experimentation. That proposal stated the field's founding conjecture: that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” This moment crystallized AI's identity, not as mere automation, but as the pursuit of systems capable of learning, adapting to new inputs, and solving complex problems.

The decades that followed were marked by cycles of optimism, disappointment, and renewal. In the mid-1960s, ELIZA, created by Joseph Weizenbaum at MIT, simulated simple conversations with users and generated early fascination with machine interaction. Though ELIZA operated through pattern matching rather than genuine understanding, many users attributed understanding to it anyway, revealing a critical insight: even a limited imitation of intelligence could be convincing.

Meanwhile, symbolic AI, focused on rule-based logic and knowledge representation, dominated research. Systems like DENDRAL, developed at Stanford to infer molecular structures from mass-spectrometry data, achieved real-world impact and proved AI's utility beyond theoretical debate.

AI revived in the 1980s, driven by commercial expert systems and renewed interest in neural networks, though progress was limited by computational power and data scarcity.

The popularization of the backpropagation algorithm in the mid-1980s gave multi-layer neural networks a practical way to learn from data, reigniting interest in adaptive intelligence. Yet progress remained uneven, punctuated by “AI winters”: periods of reduced funding and diminished confidence following unmet expectations, notably in the 1970s and again in the late 1980s and early 1990s. A turning point arrived in 2006, when Geoffrey Hinton, Yoshua Bengio, and others revived interest in deep learning through new methods for training multi-layer neural networks.

Their work, initially met with skepticism, went on to show that deep networks could handle perceptual tasks, such as speech and image recognition, that had long resisted earlier approaches.

Practical, real-world AI applications accelerated dramatically after 2010, driven by three forces: advances in hardware, particularly GPUs enabling rapid parallel computation; exponential growth in available data from the internet, social media, and mobile devices; and advances in algorithmic design, such as convolutional neural networks for image recognition and, from 2017, transformers for natural language processing. In 2012, a deep convolutional network from Hinton's group at the University of Toronto (later known as AlexNet) won the ImageNet image-classification competition by a wide margin over competing approaches, a watershed moment for the field.

This result is widely regarded as the start of the modern deep learning era, in which machines recognize visual patterns with unprecedented accuracy.

Since then, AI has permeated nearly every sector. In healthcare, AI enables early disease detection from medical imaging, personalized treatment recommendations, and drug discovery accelerated by generative models.

Finance relies on AI for fraud detection, algorithmic trading, and risk assessment, processing millions of transactions in real time. Autonomous systems—ranging from self-driving cars to robotic process automation—now perform tasks that once required expert human judgment. Meanwhile, breakthroughs in large language models (LLMs), such as those powering today’s generative AI tools, demonstrate sophisticated capabilities in reasoning, conversation, and content creation, blurring the line between human and machine intelligence.

Defining the precise moment AI “came out” is impossible given its gradual evolution from theory to application. Rather than a singular debut, AI emerged through cumulative progress: from Turing's vision, to early symbolic systems, through decades of setbacks and breakthroughs, culminating in the deep learning revolution. As Marcus Aurelius wrote, “What stands in the way becomes the way”; the field's setbacks repeatedly shaped its next advances. Today's AI systems are not just artifacts of computation: they are products of training, refined on vast datasets and shaped by human ingenuity.

This history underscores a simple truth: artificial intelligence stepped into practical reality not in a single lab or conference room, but through decades of bold ideas, incremental progress, and relentless pursuit of machine cognition.

The story of when AI came out is not one of instant triumph, but of persistent exploration—spanning over seventy years—culminating in systems that now augment, and in some cases surpass, human capabilities. From Turing’s foundational question to today’s intelligent assistants, AI’s journey reveals not just technological advancement, but a deeper evolution in how humanity imagines and builds intelligence beyond itself.
