Unleashing Sound: How Music Search Transforms Discovery in the Digital Era

In a world where over 100 million tracks reside in streaming libraries, finding the perfect song amid endless options has evolved from a daunting quest into a seamless experience—driven primarily by intelligent music search engines. Platforms powered by advanced algorithms don’t just locate songs; they interpret moods, genres, and even cultural trends to deliver personalized sonic journeys.

Every tap, query, or skip feeds a feedback loop that sharpens search precision and reshapes how listeners connect with music.

The core innovation behind modern music search lies in a blend of natural language processing, acoustic analysis, and user behavior data.

“Search is no longer just about keywords—it’s about understanding intent,” explains Dr. Elena Torres, music tech researcher at the Institute for Digital Audio Innovation. “By analyzing vocal tone, rhythm patterns, and user listening history, systems now predict what a listener might love before they articulate it.”

The Technology Behind the Search: How It All Comes Together

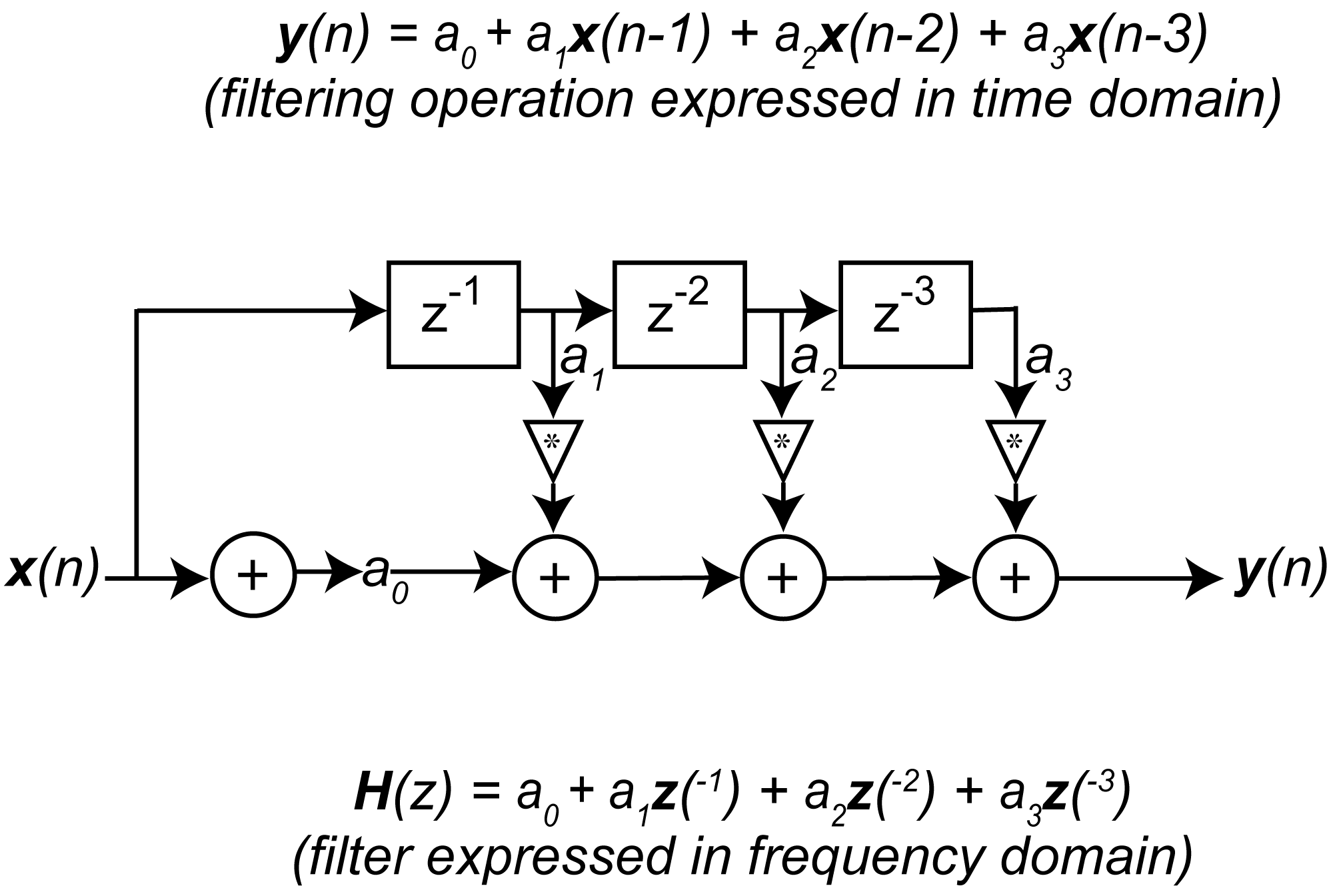

Music search engines operate through multiple layers of processing. At the foundation is acoustic fingerprinting: compact digital signatures derived from a song’s waveform, tempo, timbre, and key. When a user submits a recorded clip or hums a melody, features extracted from that audio are matched against a large database of stored fingerprints, while typed titles and lyrics are matched against indexed text and metadata.
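As a rough illustration of fingerprint matching, the sketch below uses landmark hashing, one widely described approach in which pairs of spectral peaks are hashed and candidate tracks are ranked by how many hashes agree on the same time offset. It is not taken from any particular platform. The peak lists, track names, and the `fan_out` parameter are made up for the example, and peak extraction from real audio is assumed to have happened already.

```python
from collections import Counter, defaultdict


def landmark_hashes(peaks, fan_out=3):
    """Yield ((f1, f2, dt), t1) for each peak paired with the next few peaks.

    `peaks` is a list of (frame_index, frequency_bin) tuples. Extracting them
    from a spectrogram is assumed to have been done elsewhere.
    """
    peaks = sorted(peaks)
    for i, (t1, f1) in enumerate(peaks):
        for t2, f2 in peaks[i + 1 : i + 1 + fan_out]:
            yield (f1, f2, t2 - t1), t1


def build_index(catalog):
    """Map every hash to the (track_id, time) positions where it occurs."""
    index = defaultdict(list)
    for track_id, peaks in catalog.items():
        for h, t in landmark_hashes(peaks):
            index[h].append((track_id, t))
    return index


def identify(index, query_peaks):
    """Return the track whose hashes line up with the query at one offset."""
    votes = Counter()
    for h, t_query in landmark_hashes(query_peaks):
        for track_id, t_track in index.get(h, []):
            # Matching hashes from the same recording share one time offset.
            votes[(track_id, t_track - t_query)] += 1
    if not votes:
        return None
    (track_id, _offset), _count = votes.most_common(1)[0]
    return track_id


# Hypothetical catalog: two tracks described by invented peak lists.
catalog = {
    "track_a": [(0, 40), (1, 52), (2, 47), (3, 60), (4, 52), (5, 71)],
    "track_b": [(0, 33), (1, 35), (2, 80), (3, 35), (4, 90), (5, 33)],
}
index = build_index(catalog)

# A short clip from the middle of track_a, with its own clock starting at 0.
query = [(0, 47), (1, 60), (2, 52)]
result = identify(index, query)
print(result)
```

Voting on the time offset, rather than simply counting shared hashes, is what lets a short clip from anywhere in a song still match the full recording.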

Complementing this are semantic search algorithms that parse user queries beyond literal matches. Queries like “upbeat 80s synth pop” trigger semantic networks linking tempo, instrumentation, and era-specific patterns, not just exact titles.
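A minimal sketch of that idea, assuming a small hand-written vocabulary rather than the learned semantic models a production system would likely use: the query is translated into attribute filters (tempo, era, genre) and applied to a catalog. The vocabulary, thresholds, field names, and track entries are all hypothetical.

```python
import re

# Hypothetical vocabulary; the BPM thresholds are illustrative only.
MOOD_FILTERS = {
    "upbeat": {"min_bpm": 115},
    "mellow": {"max_bpm": 95},
}
KNOWN_GENRES = ["synth pop", "indie folk", "jazz"]


def parse_query(query):
    """Turn a free-text query into a dict of attribute filters."""
    text = query.lower()
    filters = {}

    # Decade tokens such as "80s" or "1980s".
    match = re.search(r"\b((?:19|20)?\d0)s\b", text)
    if match:
        token = match.group(1)
        if len(token) == 2:
            # Assumption: "30s" to "90s" mean the 1900s, "00s" to "20s" the 2000s.
            decade = int(token)
            start = (1900 if decade >= 30 else 2000) + decade
        else:
            start = int(token)
        filters["year_range"] = (start, start + 9)

    for genre in KNOWN_GENRES:
        if genre in text:
            filters["genre"] = genre

    for word in text.split():
        filters.update(MOOD_FILTERS.get(word, {}))

    return filters


def matches(track, filters):
    """Check one track against every filter that is present."""
    if "genre" in filters and track["genre"] != filters["genre"]:
        return False
    if "year_range" in filters:
        low, high = filters["year_range"]
        if not low <= track["year"] <= high:
            return False
    if track["bpm"] < filters.get("min_bpm", 0):
        return False
    if track["bpm"] > filters.get("max_bpm", float("inf")):
        return False
    return True


# Invented catalog entries for the example.
tracks = [
    {"title": "Neon Nights", "year": 1984, "bpm": 124, "genre": "synth pop"},
    {"title": "Quiet Harbor", "year": 1986, "bpm": 82, "genre": "synth pop"},
    {"title": "Glass Skyline", "year": 2015, "bpm": 126, "genre": "synth pop"},
]

filters = parse_query("upbeat 80s synth pop")
hits = [t["title"] for t in tracks if matches(t, filters)]
print(filters, hits)
```

None of the three titles contains the words in the query, yet one is returned because its attributes fit, which is the difference between semantic and literal matching.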

Machine learning models refine these searches over time, reducing false hits and enhancing relevance with each interaction.

- Acoustic Matching: Each track’s sonic fingerprint ensures precise identification, even among similar-sounding songs.

- Semantic Understanding: Queries are interpreted contextually, grasping nuances like mood or decade.

- Behavioral Personalization: Listening history, skip patterns, and favorites shape future recommendations, creating tailored sonic profiles.

- Cross-Realm Learning: Integration with voice assistants, social media audio clips, and other platforms expands search beyond traditional playback.
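The behavioral personalization item in the list above can be sketched as a re-ranking step. This is an assumption-heavy toy, not a description of how any service works: each tag carries an affinity score updated as an exponential moving average of listener signals, and that affinity is added to the base search relevance. The signal weights, learning rate, and field names are invented for illustration.

```python
from collections import defaultdict


class TasteProfile:
    """Per-listener tag affinities learned from plays, skips, and favorites."""

    # Hypothetical signal strengths in the range [-1, 1].
    SIGNALS = {"favorite": 1.0, "play": 0.5, "skip": -1.0}

    def __init__(self, learning_rate=0.2):
        self.learning_rate = learning_rate
        self.affinity = defaultdict(float)  # tag -> score, starts at 0.0

    def record(self, event, tags):
        """Move each tag's affinity a step toward the event's signal value."""
        target = self.SIGNALS[event]
        for tag in tags:
            current = self.affinity[tag]
            self.affinity[tag] = current + self.learning_rate * (target - current)

    def rerank(self, results):
        """Sort results by base relevance plus the mean affinity of their tags."""
        def score(item):
            tags = item["tags"]
            boost = sum(self.affinity.get(t, 0.0) for t in tags) / max(len(tags), 1)
            return item["relevance"] + boost

        return sorted(results, key=score, reverse=True)


# Invented search results with base relevance from the search engine.
results = [
    {"title": "A", "relevance": 0.9, "tags": ["pop"]},
    {"title": "B", "relevance": 0.8, "tags": ["amapiano"]},
]

profile = TasteProfile()
before = [r["title"] for r in profile.rerank(results)]

profile.record("skip", ["pop"])
profile.record("skip", ["pop"])
profile.record("favorite", ["amapiano"])
after = [r["title"] for r in profile.rerank(results)]

print(before, after)
```

With no history the base relevance decides the order; after two skips and one favorite the lower-relevance track moves to the top.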

Redefining Discovery: From Random Listening to Intent-Driven Exploration

Gone are the days of scrolling through endless playlists in search of a starting point. Music search now supports intentional listening by bridging the gap between intent and discovery. A user humming a simple melody might land on a near-identical track within seconds; typing lyrics can pull up deep cuts or even remixes that a casual search would overlook.
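One simple way to match a hummed melody, shown here as a sketch rather than as the method any platform uses, is to reduce the melody to its contour (up, down, repeat, known as Parsons code) and compare contours by edit distance. Contour ignores the key and tolerates slightly off-pitch humming. The MIDI note numbers below are the familiar openings of the two tunes; the hummed input is an invented example, and pitch tracking from a microphone is assumed to have happened already.

```python
def parsons_code(pitches):
    """Encode a pitch sequence as U (up), D (down), or R (repeat) per step."""
    code = []
    for prev, cur in zip(pitches, pitches[1:]):
        code.append("U" if cur > prev else "D" if cur < prev else "R")
    return "".join(code)


def edit_distance(a, b):
    """Levenshtein distance between two strings, computed row by row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        row = [i]
        for j, cb in enumerate(b, start=1):
            row.append(min(prev[j] + 1,                # deletion
                           row[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = row
    return prev[-1]


def closest_melody(hummed_pitches, melody_db):
    """Return the name of the stored contour nearest to the hummed one."""
    query = parsons_code(hummed_pitches)
    return min(melody_db, key=lambda name: edit_distance(query, melody_db[name]))


# Opening phrases as MIDI note numbers.
melodies = {
    "Twinkle Twinkle Little Star": [60, 60, 67, 67, 69, 69, 67,
                                    65, 65, 64, 64, 62, 62, 60],
    "Ode to Joy": [64, 64, 65, 67, 67, 65, 64, 62,
                   60, 60, 62, 64, 64, 62, 62],
}
melody_db = {name: parsons_code(p) for name, p in melodies.items()}

# Hypothetical hummed input: the first ten notes, sung in a lower key.
hummed = [55, 55, 62, 62, 64, 64, 62, 60, 60, 59]
match = closest_melody(hummed, melody_db)
print(match)
```

Comparing whole strings penalizes short queries against long melodies; a fuller system would more likely align the query against any substring of each stored contour.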

Platforms leverage this behavioral data to surface not just popular hits, but hidden gems and emerging artists aligned with individual taste.

Artists and labels increasingly optimize for discovery through metadata tagging, genre hybridization, and acoustic uniqueness. For instance, a track blending indie folk with electronic elements may rank higher in semantic searches than one labeled simply “indie solo.” This shift rewards innovation and diverse expression, making the ecosystem richer and more inclusive.

Human vs. Algorithm: The Symbiosis Driving Modern Discovery

While algorithms excel at pattern recognition and rapid data processing, human curation remains vital. Editorial playlists, genre experts, and cultural tastemakers guide discovery by embedding context, like explaining why a particular song resonates with current movements or regional sounds.

AI handles scale, cognition, and personalization; humans infuse meaning, emotion, and cultural insight. Together, they form a dynamic system where machine precision meets human intuition, elevating the listener experience far beyond mechanical search.

Global Reach and Cultural Amplification Through Search

Music discovery through search extends beyond borders. A listener in Tokyo can find Moroccan fusion jazz via keyword queries or vocal descriptors learned from global artists. Platforms now index multilingual lyrics, regional genres, and non-English vocal styles, breaking linguistic barriers. Conversely, relevance ranking filters and localizes global hits, which leaves room for lesser-known tracks from emerging markets to surface.

This cross-cultural exchange fuels a mosaic of sounds, enabling underrepresented genres—such as South African amapiano or Brazilian funk—to gain international traction through intelligent search.

The Future: Immersive Searches and Beyond Audio

Looking ahead, music search is poised to enter immersive realms. Emerging technologies pair search with augmented reality, where visual cues accompany audio matches; with voice-controlled smart environments that curate playlists by ambient mood; and with AI-driven content creation that adapts music generation to user queries.

Imagine searching for “songs that mirror morning calm” and receiving a dynamic, evolving soundtrack generated in real time—responsive to your heartbeat, time of day, and environment.

As these frontiers emerge, core principles remain: accuracy, personalization, and a deep commitment to connecting listeners with sound that matters. Music search has transcended its role as a simple locator—becoming a curator, a cultural bridge, and a personal companion in the vast ocean of audio.

Every search refines not just what we hear, but how we feel, remember, and share music in an ever-connected world.
