IPython & Databricks: Where Interactive Computing Meets Scalable Data Power

IPython & Databricks: Where Interactive Computing Meets Scalable Data Power

In an era defined by data velocity and computational complexity, the integration of IPython with Databricks has emerged as a transformative force for data professionals. By bridging interactive computing with high-performance, collaborative analytics, this powerful synergy enables faster experimentation, seamless workflows, and scalable insights across engineering, science, and business teams. IPython’s native interactivity, fused with Databricks’ cloud-native data platforms, unlocks a new paradigm in data exploration and model development.

This guide dissects the integration’s architecture, practical use cases, and strategic advantages—proving why this pairing is rapidly becoming the gold standard in modern data workflows.

At its core, IPython—short for Integrated Python—delivers a rich, interactive environment ideal for iterative coding, visualization, and rapid prototyping. Its wide adoption spans Jupyter Notebooks, IPython Shell, and collaborative kernels, offering data scientists and engineers a responsive workspace to test hypotheses and debug code in real time.

Databricks, by contrast, provides a unified analytics platform built on Apache Spark, designed for large-scale data processing, machine learning, and collaborative data engineering. The integration transcends mere technical compatibility; it enables users to leverage Python’s versatility within Databricks’ distributed computing backbone, eliminating context switches between local IDEs and cloud infrastructure.

The technical architecture behind the integration

The integration hinges on three foundational pillars: seamless kernel connectivity, runtime interoperability, and ecosystem synergy. IPython kernels—particularly the Databricks-native Python kernel—run directly within the Databricks interface, allowing users to write code in familiar Jupyter environments while pipelines execute at Spark scale.This kernel bridge ensures low-latency communication, with input/output streams processed efficiently, preserving interactivity even when running thousands of iterative queries.

Behind the interface, Spark’s distributed execution model powers high-throughput data processing, enabling IPython’s notebook-based queries to scale from gigabytes to petabytes without code changes. Vegetable-like integration occurs between IPython’s interactive session lifecycle and Databricks’ scheduling, file handling, and versioning systems. Tools like Databricks Runtime (DBR) further embed Python execution engines, optimizing performance through native integration of libraries such as Pandas, NumPy, and Scikit-learn.

Practical applications: from experimentation to enterprise deployment

For data scientists and analysts, the IPython–Databricks fusion accelerates every stage of the analytics lifecycle.In exploratory data analysis (EDA), interactive notebooks allow rapid transformation and visualization of large datasets—concepts that would take hours in traditional environments now execute in minutes.

- Iterate on data pipelines with zero setup friction, using IPython’s live cell model

- Debug complex transformations visually, leveraging Matplotlib and Plotly withinすぐに recognizable notebook interfaces

- Execute SPARK SQL-like aggregations lazily, benefiting from optimized execution plans without writing low-level code

Collaborative model development in shared environments

One of the integration’s most transformative impacts lies in democratizing model development. Teams can co-edit notebooks in real time, share checkpoints via MLflow, and version experiments through Databricks Version Control—all while leveraging IPython’s interactive debugging capabilities. This removes silos between data engineers, scientists, and business analysts, fostering cross-functional collaboration.Consider a team building a recommendation engine: a data scientist writes and tests an algorithm in an IPython cell, evaluates precision/recall live, then deploys it via a Scala-based ML pipeline—all within a single, traceable workflow.

Best practices for maximizing integration

To harness the full potential, organizations should adopt these strategies: - **Embed IPython kernels in collaborative notebooks** with role-based access controls to maintain security and governance. - **Leverage caching and checkpointing** to speed iterative development—IPython’s live cell cache paired with Databricks’ stateful execution preserves progress across long runs. - **Integrate with Databricks Lakehouse architectural principles**, using Delta Lake and UNIFY table APIs to ensure data consistency between interactive sessions and production pipelines.- **Automate experiment tracking** via MLflow or Davate graphs, linking notebook executions to model lineage and metrics for audit readiness.

The integration of IPython and Databricks is not merely a technical convenience—it represents a paradigm shift in how data teams conceived, executed, and scaled analytics. By uniting interactivity, performance, and collaboration, it empowers users to move swiftly from curiosity to insight, experimentation to deployment.

As data volumes grow and computational demands intensify, this synergy sets a new benchmark for operational agility in data-driven organizations.

The future of data innovation

Looking forward, the convergence of IPython and Databricks will continue evolving, driven by advances in collaborative AI interfaces, real-time streaming analytics, and tighter MLOps integration. With sparse computing, enhanced kernels, and tighter security controls on the horizon, the platform is poised to enable even deeper exploration—turning complex data challenges into immediate, actionable breakthroughs

Related Post

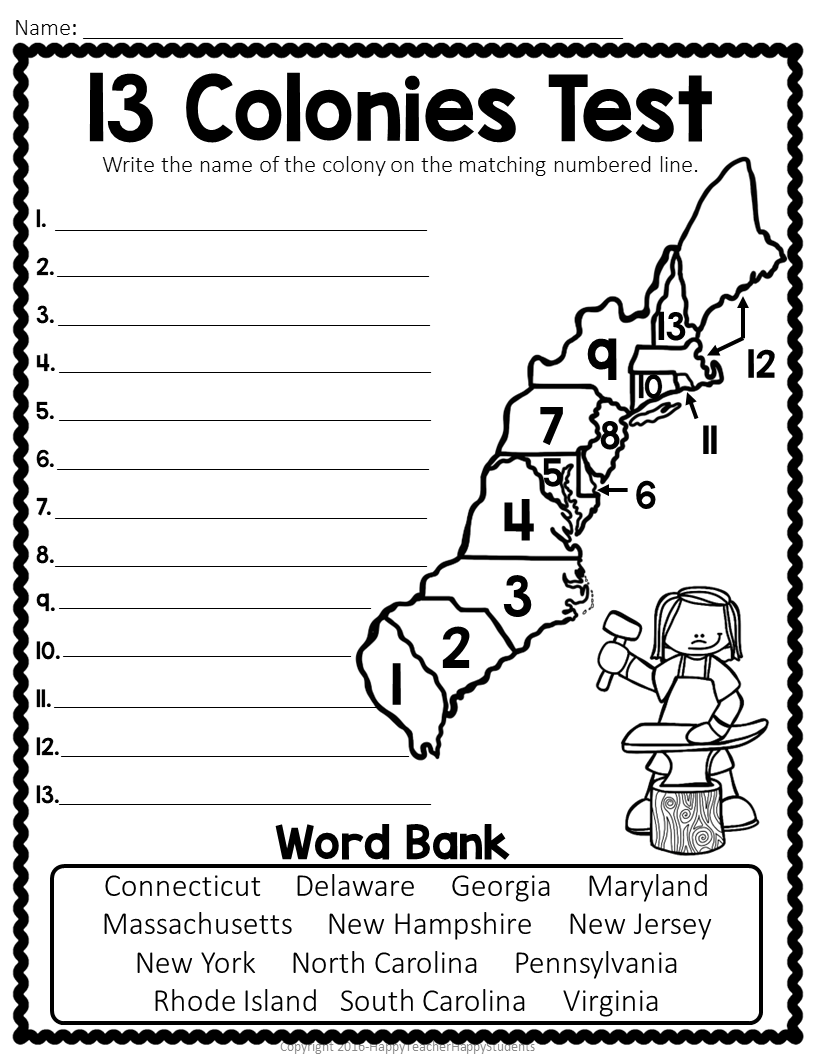

Mapping the Founding Spark: The 13 Colonies Map Blank Reveals America’s Birthplace

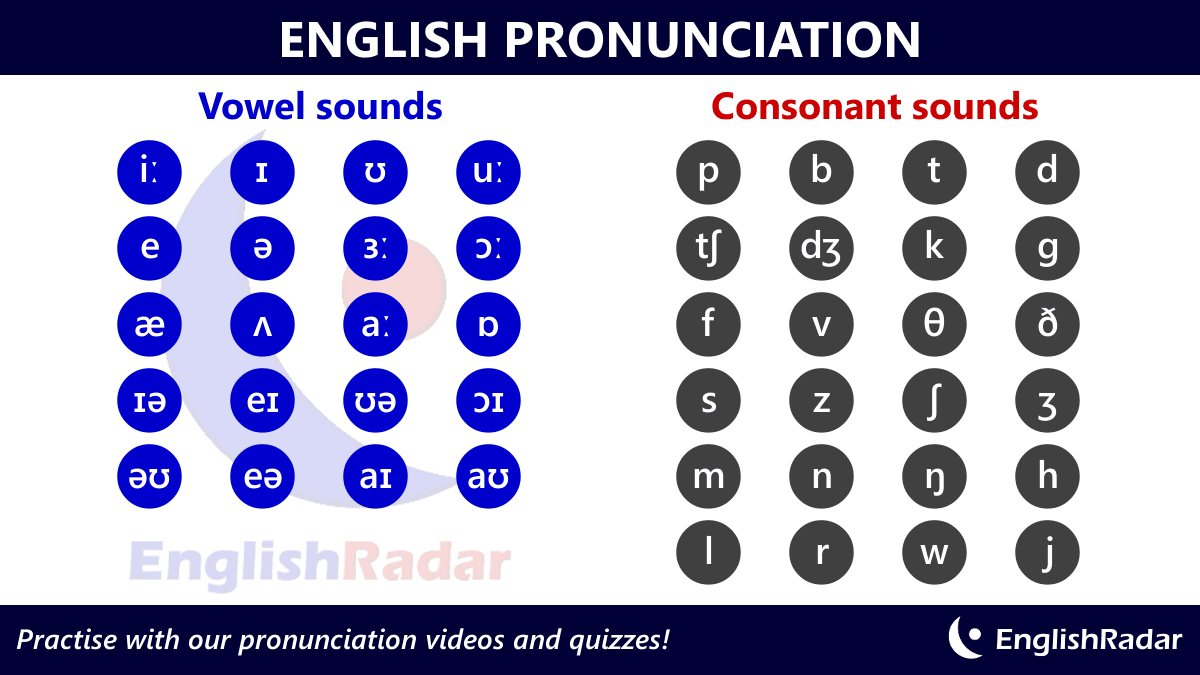

Mastering Vowel Pronunciation: How Long Sounds Transform French, English, and Beyond

Horton And The World Of Who: A Whimsical Animated Journey That Brings Whoabouts to Life

What Is a Walk-Off Home Run? The electrifying finish that lights up baseball stadiums